Sampling for Proximity and Availability

Alex Evans, Nicolas Mohnblatt and Guillermo Angeris

This article is cross-posted from the Bain Capital Crypto Insights.

In blockchains, nodes can ensure that the chain is valid without trusting anyone, not even the validators or miners. Early blockchain design can achieve this by having each of these nodes download and re-execute all transactions itself. The inefficiency of this approach motivated the adoption of fraud and validity proofs. While these proofs provide assurances that the underlying state transitions of the blockchain were computed correctly, malicious block producers may still withhold the data required to compute the latest state. (In other words, while a node without this data may ensure that every change performed to the state was valid—since the proofs verify—the node may not be able to know what the underlying state actually is.) Such an attack could prevent users from composing valid transactions (e.g., to withdraw assets from some pool) or could prevent other nodes from constructing proofs of their own. The simplest way to ensure that the data needed to construct the underlying state can be downloaded is, of course, to try and download it. This is the approach most current blockchains take. The downside of this approach is that, as usage increases, every node must download all the data, which, in turn, puts strain on the network and its available bandwidth.

Data availability sampling. In response, [ASB181]1 introduced data availability sampling (DAS). This technique allows resource-constrained nodes (referred to as light nodes) to probabilistically verify the availability of data without downloading the entire block. That work roughly shows that, under some trust assumptions, if (a) the block data that we wish to make public is encoded via some error correcting code and (b) if enough people are sampling small parts of the encoded data, then the data must be available in that either it can be directly downloaded or, failing that, it can be reconstructed from the samples that have been collected by others in the network. This sampling procedure can ensure the data is available if two basic properties, which we describe next, hold.

Requirements. First, for DAS to work, there must be some way of guaranteeing that the data that was sampled is correctly encoded. Second, there must be ‘enough’ nodes actively sampling. In particular, the number must be chosen to ensure that these nodes, collectively, have enough symbols of the encoding to reconstruct the original data. DAS protocols in production today (such as those of [ASB18]) handle the former either through fraud-proofs or through KZG commitments. Fraud proofs are extremely efficient for the encoder but impose latency as well as an additional trust assumption: each light node is assumed to be connected to at least one honest full node. KZG commitments obviate this trust assumption, but require a trusted setup and are very expensive to compute, at least relative to computing the encoding itself. (Which is roughly the cost of the fraud-proof based encoding.)

Hashing-based proofs. Recent excellent works [HSW2322, HSW2433] have pointed to a third potential approach: instead of using KZG, it is possible to adapt computationally efficient hashing-based proofs such as those of [AHIV174]4 and [BBHR5]5 to establish that the encoding has been correctly computed. While these constructions have a number of desirable properties, including more efficient provers, weaker cryptographic assumptions, and no trusted setup, the constructions do feature large commitment sizes. As this commitment needs to be downloaded by all light nodes, the resulting overhead makes these constructions impractical.

This post. In this post, we’ll discuss a simple way to significantly reduce this overhead. We argue that if enough samples are requested and verified by a light node during DAS, these samples can also be used to ensure correctness of the encoding. We show that merging samples to establish both availability and integrity of the encoding results in more efficient DAS constructions.

Sampling for both proximity and availability

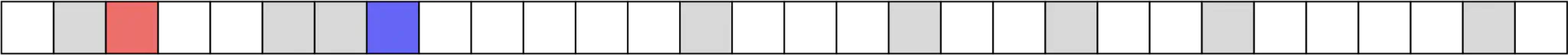

FRIDA construction. In FRIDA, the prover first encodes some data using a Reed–Solomon code and commits to the resulting entries of the vector via a Merkle tree commitment. The prover then uses the proving algorithm of the FRI protocol to produce a non-interactive proof that this vector is indeed within unique decoding of a Reed–Solomon codeword (a ‘proof of proximity’). The proof includes $L$ queries of the original vector on which the FRI verification procedure is run, where $L$ is chosen to achieve a given security level. (For the those less familiar with FRI, the exact details of this procedure are not important, though we will reference $L$ later.) The resulting proof (with the $L$ queries) is shared with light nodes as part of the commitment to the data. Each light node downloads the commitment, verifies the non-interactive proof, and makes $Q$ additional (interactive) random queries which are used as samples in the DAS scheme. An important observation of FRIDA is that running the FRI verification procedure on these additional queries implies that these received symbols correspond exactly to symbols of the closest codeword (which we have just proved exists via the initial $L$ queries). To summarize, the non-interactive proof of proximity (which contains $L$ queries) proves to light nodes that the vector the prover committed to is close to a unique Reed–Solomon codeword. These $L$ queries are the same for all nodes. Running the FRI verification procedure for the $Q$ additional queries proves to nodes that (verified) responses their specific queries, which are sampled independently and randomly across nodes, are symbols of that same (unique) codeword. As a result, one can decode the data by assembling enough queries with proofs, as required for a secure DAS scheme. The sketch below summarizes this protocol for $N=2$ light nodes making $Q=1$ queries each with $L=9$ non-interactive queries appended to the commitment. Each light node has to download $L+Q=10$ samples in total, or $1/3$ of the encoded data. (Note that at most two of these samples can be unique, since the $L$ samples are the same for both nodes.) If we’re using a rate of $1/2$ and a message of size $15$, the nodes need at least $15$ samples to decode the message. In other words, if these nodes put their samples together, they will not ever have enough data to be able to reconstruct the message, unless they request additional samples. (Or, alternatively, assume more nodes are also sampling.)

Leveraging interaction. In a simple amendment to this construction, we can have each light node interactively request and run FRI verification on an independent set of $L$ samples, instead of sending the same non-interactive proof of size $L$ to each node. (The parameter $Q$ is ignored in this setting.) This fully interactive procedure corresponds to running the query phase of FRI with interactive randomness, which is no less secure for the light node. In this case, the two nodes will, with high probability ($>99%$, by choice of $L$) sample enough data ($\ge 15$ unique squares) to decode. In this second sketch, purple squares correspond to unlucky entries that both nodes redundantly sampled. The ‘bad’ case where they sample at least nine such redundant squares occurs with tiny probability, roughly $2^{-28}$, as opposed to probability $1$ when using non-interactive randomness. In other words, the grey (non-interactive) samples are ‘pure overhead’ in that they do not provide additional information to the network (i.e., information that can help reconstruct the original message) after the first node downloads them.

Efficiency. This simple change can make the resulting DAS scheme much more efficient. If we use $80$ bits of security, as in the original FRIDA paper, we require $L=128$. For the same level of security, a scheme that makes $128+1$ queries interactively requires two orders of magnitude fewer light nodes than one where each node issues one sample in addition to downloading a non-interactive proof with $L=128$. In short, sampling for proximity and availability in the same protocol significantly improves efficiency.

Comparisons. There is one tradeoff. In the interactive case, nodes must sample without replacement to match standard FRI security analyses. In seeking to define simple, modular primitives for DAS, the constructions in [HSW23, HSW24] allow for more flexibility in the sampling strategy of nodes. However, for practical protocols, we suspect the efficiency gained is worth the restriction.

Future work. While we’ve shown that interactive queries increase DAS efficiency when using FRIDA, significant overheads remains. Standard FRI verification utilizes correlated queries across rounds. Since queries to a given FRI oracle perfectly correlate with queries in the original oracle, they seem to yield no useful information to the network. A question in this case, remains: is it possible to have a protocol whose proofs all yield information that can be used to both prove a correct encoding and whose contents can be used to decode the original data?

Stay tuned for the next post.

Acknowledgements

We’d like to thank Kobi Gurkan, John Adler, Nashqueue, and Sanaz Taheri for useful discussions regarding data availability, its requirements, and possible constructions. (On the other hand, any mistakes or silliness in this post are completely ours.)

-

[ASB18] Mustafa Al-Bassam, Alberto Sonnino, and Vitalik Buterin. Fraud proofs: Maximising light client security and scaling blockchains with dishonest majorities. CoRR, abs/1809.09044, 2018. ↩︎

-

[HSW23] Mathias Hall-Andersen, Mark Simkin, and Benedikt Wagner. Foundations of data availability sampling. Cryptology ePrint Archive, Paper 2023/1079, 2023. ↩︎

-

[HSW24] Mathias Hall-Andersen, Mark Simkin, and Benedikt Wagner. FRIDA: Data availability sampling from FRI. Cryptology ePrint Archive, Paper 2024/248, 2024. ↩︎

-

[AHIV17] Scott Ames, Carmit Hazay, Yuval Ishai, and Muthuramakrishnan Venkitasubramaniam. Ligero: Lightweight sublinear arguments without a trusted setup. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, pages 2087–2104, Dallas Texas USA, October 2017. ACM. ↩︎

-

[BBHR18] Eli Ben-Sasson, Iddo Bentov, Yinon Horesh, and Michael Riabzev. Fast Reed–Solomon Interactive Oracle Proofs of Proximity. pages 1–17, 2018. ↩︎